Wireguard Infrastructure

The series Ungoogling my Computing alluded to the use of Wireguard to help secure networking. It might be useful to look at the general structure of the setup more closely, hence a separate post on the subject.

Wireguard is a relatively new VPN technology which owes its breakthrough in the 2020s to its advantages over established protocols such as IPsec: it has a very small codebase, is simple to configure, has only a few moving parts that actually need configuration, uses modern, well-vetted cryptography, and is available on practically every relevant computing platform. It is so good, in fact, that it was adopted into the Linux kernel quite quickly.

A lot of devices based on embedded Linux or *BSD also support the technology: pfSense/OPNsense, OpenWrt, Mikrotik and other routers built on Linux, some NAS systems, and many Android devices from "smart" TVs to set-top boxes and car entertainment head units, as long as there is some way to access an app store or sideload APKs.

There are also a number of adjacent technologies and products which we are not going to discuss here but which offer a similar VPN experience with additional features. These include Zerotier, Tailscale, and Nebula, plus a few others. Several commercial VPN providers have also moved to or support Wireguard, for example Mullvad.1

A Note About Tailscale

Tailscale uses Wireguard technology but aims to package it to make it even easier to get up and running. Their comparison page gives a very useful overview of similarities and differences of the respective products/technologies. If you are interested in using Tailscale instead of the manual approach described here you can use their service starting from the free level upwards. Alternatively, the entire codebase of Tailscale is open source with the exception of the coordination server. There is an independent, free implementation of a coordination server called Headscale which means it's possible to self-host a complete Tailnet.

Design Considerations

This is the list of requirements I had when setting this up:

- ubiquitous ad-blocking on all home devices: phones, laptops, media consumption (streaming) devices. Specifically, it should cover all traffic, not only the browsers.

- same level of ad-blocking when in a hotel or on the road (via mobile networks)

- secure (off-the-Internet) access to a limited number of self-hosted services; for example a private web server, calendaring server, IMAP, etc.

- secure transport for Internet-bound traffic over untrusted networks such as hotel Wifi

- it should be always-on, simple to configure, and require the lowest possible level of maintenance and potential for breakage

- should provide transparent support for IPv4 and IPv6

One of my "anti-features" is remote access into my house from afar. I know it's a common use case and it would be fairly straightforward to incorporate, but I don't want or need anything to be able to get into my home network remotely. Those things I want to keep an eye on from afar are hosted elsewhere, or I have suitable notification mechanisms set up. In case something goes wrong I'd much rather have it happen on a VPS than inside my own four walls.

Setup

Here's the general view of the topology:

The hub of the whole system is the VPS on the right. It is a virtual root server that is hosted with one of the large VPS providers and has a static IPv4 address and a provider-specific IPv6 prefix assigned to it. Every client has a Wireguard installation on it, including select VMs running on laptops. The black lines show regular network connectivity, the red lines encrypted Wireguard tunnels. All tunnels terminate on the VPS, hence the "hub" name.

IP Addressing

Wireguard works on the principle of point-to-point connections. In the configurations a peer relationship is established between a local and a remote device. This is called a tunnel because the traffic traveling between the two devices is encrypted using keys negotiated via asymmetric cryptography. Any number of tunnels can be configured to different remote devices. Configuring multiple tunnels to the same remote device is possible and there are use cases for it, but it is best avoided at this stage and outside the scope of this discussion.

In this setup each client device has exactly one tunnel to the VPS server. Inside these tunnels we need to assign IP addresses that create what is called an "overlay network". These are shown in red, so the VPS is 10.7.0.1, the streaming client is 10.7.0.4, etc. We are using the 10.7.0.0/24 network for this, but that is an arbitrary choice.

Shown in green are the mobile devices, laptop and phone, with their IPs. Note that it does not matter in which location they are and how they reach the VPS. They will always keep their 10.7.0.x addresses inside of the tunnel, regardless of what their physical IPs may be.
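
The addressing scheme can be sketched in a few lines of Python using the standard `ipaddress` module. This is only an illustration under my own assumptions: the device labels and the `overlay_addresses` helper are made up for this example, reusing the same host number in both address families is a convention rather than a requirement, and the IPv6 prefix `fd00:7::/48` is the one that appears in the configurations further down.

```python
import ipaddress

# Overlay prefixes as used in this post; any private range would work.
OVERLAY_V4 = ipaddress.ip_network("10.7.0.0/24")
OVERLAY_V6 = ipaddress.ip_network("fd00:7::/48")


def overlay_addresses(host_id):
    """Return the (IPv4, IPv6) tunnel addresses for a host number.

    Hypothetical helper: the same host number is reused in both families.
    """
    v4 = OVERLAY_V4.network_address + host_id
    reserved = (OVERLAY_V4.network_address, OVERLAY_V4.broadcast_address)
    if v4 not in OVERLAY_V4 or v4 in reserved:
        raise ValueError(f"host id {host_id} does not fit into {OVERLAY_V4}")
    v6 = OVERLAY_V6.network_address + host_id
    return str(v4), str(v6)


# Labels are illustrative; the numbers match the topology described above.
DEVICES = {"vps": 1, "laptop": 3, "streaming-client": 4, "phone": 5}

for name, host_id in DEVICES.items():
    v4, v6 = overlay_addresses(host_id)
    print(f"{name:17} {v4:10} {v6}")
```

Whatever physical network a device happens to be on, these are the addresses the other participants see.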

IPv6

An interesting aspect of tunneling in general is that the IP standard used inside the tunnel is independent from the standard used for the outside transport. In other words, you can transport IPv6 packets inside a tunnel over an IPv4 network, or vice versa. I've omitted the IPv6 configuration from the topology diagram for the sake of simplicity, but it is shown in the configurations below.
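
The price of this independence is a fixed per-packet overhead, which depends on the outer address family. The following sketch works through the arithmetic for a standard 1500-byte link. The header sizes are the usual ones as far as I know (IP headers without options or extension headers, Wireguard's 16-byte data header and 16-byte authentication tag); `tunnel_mtu` is a hypothetical helper name.

```python
# Per-packet overhead in bytes for Wireguard's UDP encapsulation.
OUTER_IP_HEADER = {"ipv4": 20, "ipv6": 40}  # without options/extension headers
UDP_HEADER = 8
WG_DATA_HEADER = 16  # message type, reserved bytes, receiver index, counter
WG_AUTH_TAG = 16     # Poly1305 authentication tag


def tunnel_mtu(link_mtu, outer):
    """Largest inner packet that fits into one outer packet of size link_mtu."""
    overhead = OUTER_IP_HEADER[outer] + UDP_HEADER + WG_DATA_HEADER + WG_AUTH_TAG
    return link_mtu - overhead


for outer in ("ipv4", "ipv6"):
    print(outer, tunnel_mtu(1500, outer))
```

The IPv6 figure, 1420, is the MTU that the wg0 interface reports in the `ip a` output later in this post. It is the conservative choice that works regardless of which family carries the tunnel.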

Let's look at some example snippets from the configurations.

Client Configs

First the clients:

Linux

# /etc/wireguard/wg0.conf on Fedora laptop

[Interface]

Address = 10.7.0.3/24, fd00:7::3/48

PrivateKey = BASE64KEY

DNS = 10.7.0.1

PostUp = ip route add 192.168.0.0/16 via 192.168.4.1

PostDown = ip route del 192.168.0.0/16 via 192.168.4.1

[Peer]

PublicKey = BASE64KEY

AllowedIPs = 0.0.0.0/0, ::/0

Endpoint = VPS_IP:34700

PersistentKeepalive = 15

A couple of notes here. The address line has IPv4 as well as IPv6 addresses for the tunnel inside interface, meaning both IPv4 as well as IPv6 traffic can transit the tunnel. The VPS_IP under "Endpoint" can be either IPv4 or IPv6, but not both at the same time. This controls how you reach the hub of your Wireguard network.

34700 is the port the Hub server is listening on for incoming connections, and that port must be open in your firewall configuration.

The PostUp and PostDown commands establish routes for my local home network (192.168.0.0/16) to be routed outside of the tunnel directly to my Wifi's gateway. This establishes split tunneling.
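
Why the more specific route wins can be shown with a small model of longest-prefix matching. This is a simplification: on Linux, wg-quick realizes the catch-all AllowedIPs entry with policy routing rather than a plain default route, but as far as I can tell the outcome for this purpose is the same. The `ROUTES` table and `pick_route` are illustrative names, not part of any tool.

```python
import ipaddress

# (prefix, where the packet goes): simplified view of the laptop's IPv4
# routes while the tunnel is up.
ROUTES = [
    ("0.0.0.0/0", "wg0 tunnel"),            # from AllowedIPs = 0.0.0.0/0
    ("192.168.0.0/16", "via 192.168.4.1"),  # from the PostUp command
]


def pick_route(destination):
    """Return the target of the most specific route covering the destination."""
    dst = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), target)
        for prefix, target in ROUTES
        if dst in ipaddress.ip_network(prefix)
    ]
    network, target = max(candidates, key=lambda item: item[0].prefixlen)
    return target


print(pick_route("192.168.4.20"))     # a device on the home network
print(pick_route("142.250.186.100"))  # an Internet host
```

Home-network destinations take the /16 route to the Wifi gateway, everything else falls through to the tunnel.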

Android

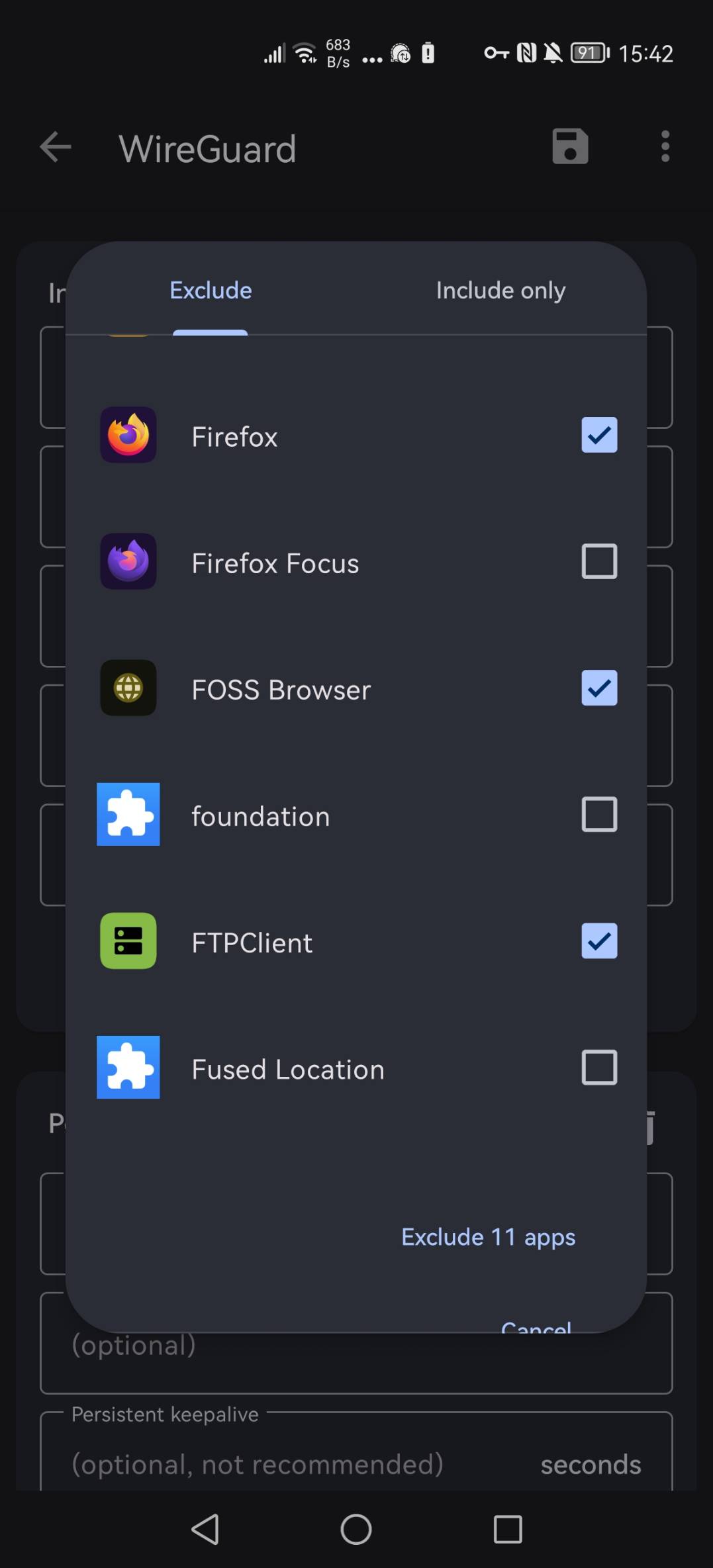

The Wireguard app for mobile is available in Apple's App Store and in the F-Droid store for Android. The configuration looks exactly the same, just with a GUI. One major additional point is that applications can be excluded to give them split-tunneling access to the local network.

In the screenshot above, the Firefox web browser, FOSS Browser, and FTPclient are marked as excluded so they are able to access resources inside the locally connected Wifi, which obviously doesn't work while on the road. You can see that I have multiple browsers installed and use them for different purposes. Firefox Focus is my main Internet browser since it's pretty locked down.2

The streaming client is also an Android device, so uses the same client setup.

Server Config

This is the server config:

# /etc/wireguard/wg0.conf on the Redhat server

[Interface]

Address = 10.7.0.1/24

Address = fd00:7::1/48

ListenPort = 34700

PrivateKey = BASE64KEY

PostUp = sysctl -w net.ipv6.conf.all.forwarding=1; ip6tables -t nat -A POSTROUTING -o eth0 -s fd00:7::/48 -j MASQUERADE

# Zenbook

[Peer]

PublicKey=BASE64KEY

AllowedIPs=10.7.0.3/32, 1.2.3.0/24

AllowedIPs=fd00:7::3/128

# Mi Box S

[Peer]

PublicKey=BASE64KEY

AllowedIPs=10.7.0.4/32

AllowedIPs=fd00:7::4/128

# Huawei P40Pro

[Peer]

PublicKey=BASE64KEY

AllowedIPs=10.7.0.5/32

AllowedIPs=fd00:7::5/128

# [...]

It's quite unspectacular, essentially just holding a [Peer] section for every device that wants to participate in the network while only having a single [Interface] section for itself. That is the hallmark of being the Hub.

Of note: each peer section has two statements defining the allowed IPs for both v4 and v6. These allow the Wireguard kernel module to make decisions about where to route traffic. In the case of the Zenbook you can see how to route multiple subnets of one address family (in this case IPv4) into the same tunnel.
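
The role of AllowedIPs on the hub can be modeled as a lookup table that works in both directions: outbound it selects the peer (and thus the key) for a destination, inbound it acts as a source filter. The sketch below is a toy model of that idea using the prefixes from the server config above; the function names are my own and nothing here reflects how the kernel module is actually implemented.

```python
import ipaddress

# AllowedIPs per peer, copied from the server config above.
PEERS = {
    "Zenbook": ["10.7.0.3/32", "1.2.3.0/24", "fd00:7::3/128"],
    "Mi Box S": ["10.7.0.4/32", "fd00:7::4/128"],
    "Huawei P40Pro": ["10.7.0.5/32", "fd00:7::5/128"],
}


def _covers(prefix, address):
    """True if the address is of the same family as and inside the prefix."""
    network = ipaddress.ip_network(prefix)
    return network.version == address.version and address in network


def peer_for_destination(destination):
    """Outbound: pick the peer whose AllowedIPs match most specifically."""
    dst = ipaddress.ip_address(destination)
    best = None
    for peer, prefixes in PEERS.items():
        for prefix in prefixes:
            if _covers(prefix, dst):
                length = ipaddress.ip_network(prefix).prefixlen
                if best is None or length > best[0]:
                    best = (length, peer)
    return best[1] if best else None


def accept_from_peer(peer, source):
    """Inbound: keep a decrypted packet only if its source is allowed for that peer."""
    src = ipaddress.ip_address(source)
    return any(_covers(prefix, src) for prefix in PEERS[peer])


print(peer_for_destination("1.2.3.77"))          # routed into the Zenbook tunnel
print(accept_from_peer("Mi Box S", "10.7.0.3"))  # spoofed source, rejected
```

A packet arriving through the Mi Box S tunnel but claiming to come from the Zenbook's address is dropped, which is why each device gets a /32 and a /128 of its own.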

The PostUp statement is somewhat unusual as it turns on IPv6 routing and adds an IPv6 iptables rule that uses masquerading (aka source-NAT) to translate the private fd00:7::x addresses to the provider-specific IPv6 on the outbound interface.3

Interfaces & Routing

Will all this create a mess on the network side? Let's have a look:

# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 96:00:00:24:db:f8 brd ff:ff:ff:ff:ff:ff

inet REDACTED/32 brd REDACTED scope global dynamic eth0

valid_lft 55604sec preferred_lft 55604sec

inet6 2a01:REDACTED::1/64 scope global

valid_lft forever preferred_lft forever

inet6 fe80::REDACTED/64 scope link

valid_lft forever preferred_lft forever

96: wg0: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN group default qlen 1000

link/none

inet 10.7.0.1/24 scope global wg0

valid_lft forever preferred_lft forever

inet6 fd00:7::1/48 scope global

valid_lft forever preferred_lft forever

inet6 fe80::a36c:ddab:9ec8:697b/64 scope link flags 800

valid_lft forever preferred_lft forever

# ip r

default via 172.31.1.1 dev eth0

1.2.3.0/24 dev wg0 scope link

10.7.0.0/24 dev wg0 proto kernel scope link src 10.7.0.1

101.33.0.0/16 via 10.7.0.1 dev wg0

169.254.0.0/16 dev eth0 scope link metric 1002

172.31.1.1 dev eth0 scope link

No, quite clean. Specifically, since all client devices are inside the same subnet of 10.7.0.0/24 there is only a single summary route to dev wg0.4

Traffic Flows

Let's examine the way traffic flows from the laptop through the tunnel out to the Internet:

$ traceroute www.google.com

traceroute to www.google.com (142.250.186.100), 30 hops max, 60 byte packets

1 my.hub.server (10.7.0.1) 20.441 ms 20.422 ms 20.604 ms

2 172.31.1.1 (172.31.1.1) 27.591 ms 27.562 ms 27.563 ms

3 providerhop1 (x.x.x.x) 21.087 ms 21.374 ms 21.328 ms

5 providerhop2 (x.x.x.x) 22.241 ms 22.222 ms 22.183 ms

[...]

9 142.250.160.234 (142.250.160.234) 24.008 ms 72.14.218.176 (72.14.218.176) 24.436 ms 24.416 ms

11 142.250.234.16 (142.250.234.16) 24.683 ms 142.250.226.148 (142.250.226.148) 24.548 ms 142.251.64.186 (142.251.64.186) 26.378 ms

12 142.250.214.191 (142.250.214.191) 24.949 ms 142.250.214.193 (142.250.214.193) 24.918 ms 192.178.109.216 (192.178.109.216) 25.339 ms

13 * * fra24s06-in-f4.1e100.net (142.250.186.100) 24.140 ms

Likewise, IPv6 ping works as well (keeping in mind that the Wireguard tunnel operates over my IPv4-only Internet connection):

$ ping6 www.google.com

PING www.google.com (2a00:1450:4001:829::2004) 56 data bytes

64 bytes from fra24s06-in-x04.1e100.net (2a00:1450:4001:829::2004): icmp_seq=1 ttl=115 time=25.5 ms

64 bytes from fra24s06-in-x04.1e100.net (2a00:1450:4001:829::2004): icmp_seq=2 ttl=115 time=24.5 ms

Use Cases

Coming back to the initial requirements:

1 - Ubiquitous Ad Blocking For All Traffic

You can see in the client configurations that the DNS server is set to 10.7.0.1, i.e. the Hub server's tunnel inside IP. Every DNS request will go to that server as long as the tunnel is up.5 Refer to Ungoogling My Computing Part 2 for details of the DNS server setup.

In the case of the streaming device in my home this tunnel setup also bypasses geolocation blocking as the requests to the streaming service are always coming from the VPS' physical IP address. The Android client offers the possibility to configure multiple tunnels to different servers and switch between them with the click of a button, so you can see how this would be useful for subscribers to multiple services from different regions.

2 - Ad Blocking On The Road

We've seen that the tunnel configuration allows for any underlying transport network, including changing between them (such as getting onto a mobile network when leaving the range of your home's Wifi network). As long as the tunnel can be established you'll be covered.

3 - Secure Access To Self-Hosted Services

The VPS in my case runs web, calendaring, and IMAP servers. In all cases they are bound to the Wireguard interface, 10.7.0.1, and as such are not accessible except by a device participating in the Wireguard setup.
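
What "bound to the Wireguard interface" means at the socket level can be shown with a minimal listener. This is a generic sketch, not the configuration of any of the servers mentioned; `make_listener` is a hypothetical helper and port 8080 is an arbitrary example.

```python
import socket


def make_listener(host, port):
    """Open a TCP listening socket on one specific local address only."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))  # fails if the address is not configured locally
    sock.listen()
    return sock


# On the VPS, with wg0 up (port 8080 is an arbitrary example):
#   listener = make_listener("10.7.0.1", 8080)
# Binding to "0.0.0.0" instead would expose the service on eth0 as well.
```

One consequence of this approach is that the bind fails while wg0 is down, so such services need to start after the tunnel interface has come up.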

4 - Secure Transport

This isn't so much of a use case when you are in your home network, but is very valuable while on the road. Someone sniffing the hotel Wifi will only see traffic bound to your VPS' IP address, port 34700, and it's encrypted.

I have not noticed any performance or other impact, so I leave the tunnel up even when at home. Note that it is required to be up if you want to access the private services hosted on the VPS.

5 - Always-on And Simple

It is quite simple to configure as shown above, so covering multiple devices is essentially copy and paste. There really isn't any maintenance to speak of.
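
If copy and paste gets tedious, the [Peer] stanzas on the hub are regular enough to generate. A minimal sketch, assuming the addressing convention used in this post (one host number per device in both address families); `render_peer` and the `DEVICES` list are illustrative, and the keys are placeholders.

```python
import ipaddress

OVERLAY_V4 = ipaddress.ip_network("10.7.0.0/24")
OVERLAY_V6 = ipaddress.ip_network("fd00:7::/48")


def render_peer(name, public_key, host_id, extra_allowed=()):
    """Render one [Peer] stanza for the hub's wg0.conf."""
    v4 = OVERLAY_V4.network_address + host_id
    v6 = OVERLAY_V6.network_address + host_id
    allowed_v4 = ", ".join([f"{v4}/32", *extra_allowed])
    return "\n".join([
        f"# {name}",
        "[Peer]",
        f"PublicKey = {public_key}",
        f"AllowedIPs = {allowed_v4}",
        f"AllowedIPs = {v6}/128",
    ])


# Placeholder keys; the real public keys come from each device.
DEVICES = [
    ("Zenbook", "BASE64KEY", 3, ["1.2.3.0/24"]),
    ("Mi Box S", "BASE64KEY", 4, []),
    ("Huawei P40Pro", "BASE64KEY", 5, []),
]

print("\n\n".join(render_peer(*device) for device in DEVICES))
```

Only public keys ever appear in the generated text, so the output is safe to keep alongside other configuration.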

The always-on criterion is also fulfilled: at least on Android and Linux the service starts reliably, and I haven't had a single issue in years of use. I have no experience with other OSes.

Additional Considerations

Some additional comments about the setup.

Security

The security of Wireguard rides on the back of the private keys stored on the participating devices. It's game over should those keys be obtained by a third party. Specifically, if your laptop or mobile phone is stolen, whoever has it will be able to use the tunnel to infiltrate your network.

Should any device go missing, it is absolutely necessary to remove that node's configuration from the Hub VPS's Peers list as quickly as possible.
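
Removing a peer from the configuration file is simple enough to script so that it can be done quickly under stress. The following is a rough sketch that operates on the config text only; `drop_peer` and the sample keys are made up for illustration. It does not touch the running interface, which also has to drop the peer, for example by restarting the tunnel or with `wg set wg0 peer <key> remove`.

```python
def _split_blocks(config_text):
    """Split a config into sections, keeping leading comments with their section."""
    blocks, current, pending = [], [], []
    for line in config_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            if current:
                blocks.append(current)
            current, pending = pending + [line], []
        elif not stripped or stripped.startswith("#"):
            pending.append(line)  # may belong to the next section
        else:
            current.extend(pending)
            current.append(line)
            pending = []
    current.extend(pending)
    if current:
        blocks.append(current)
    return blocks


def _public_key(block):
    """Return the PublicKey value of a section, or None if it has none."""
    for line in block:
        key, sep, value = line.partition("=")
        if sep and key.strip().lower() == "publickey":
            return value.strip()
    return None


def drop_peer(config_text, public_key):
    """Return the config without the [Peer] section that has the given key."""
    kept = [
        block for block in _split_blocks(config_text)
        if _public_key(block) != public_key
    ]
    return "\n".join(line for block in kept for line in block) + "\n"


# Made-up sample with placeholder keys.
SAMPLE = """[Interface]
Address = 10.7.0.1/24
PrivateKey = HUBKEY

# Zenbook
[Peer]
PublicKey = KEY_A=
AllowedIPs = 10.7.0.3/32

# Mi Box S
[Peer]
PublicKey = KEY_B=
AllowedIPs = 10.7.0.4/32
"""

print(drop_peer(SAMPLE, "KEY_B="))
```

The comment line naming the device is removed together with its section, so no stale labels are left behind.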

If you keep any of the configurations in for example Git make very sure the private keys are excluded. Also set the ownerships and permissions of /etc/wireguard/ correctly. It's hard to analyze what happens with these configuration artifacts on mobile OSes, especially when syncing to the "cloud", so caveat emptor.
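
Both checks are easy to automate. The sketch below is one possible way to do it, assuming a Unix-like system: one helper verifies that a file is readable by its owner only, the other finds PrivateKey lines in a config text before it gets committed. Both function names are hypothetical.

```python
import os
import stat


def is_owner_only(path):
    """True if neither group nor others have any permission bits on path."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0


def lines_with_private_keys(config_text):
    """Return the 1-based numbers of lines that set a PrivateKey."""
    hits = []
    for number, line in enumerate(config_text.splitlines(), start=1):
        key, sep, _ = line.partition("=")
        if sep and key.strip().lower() == "privatekey":
            hits.append(number)
    return hits


# Typical use on the hub or a Linux client:
#   is_owner_only("/etc/wireguard/wg0.conf")
```

The second helper could be wired into a pre-commit hook that refuses the commit whenever it returns a non-empty list.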

Remote Access

I stated above that remote access into my home network was not something I want or need. As you can see from the topology diagram the home server does not participate in the Wireguard overlay network, and it is the only thing running 24x7.

With the shown configurations all traffic between the Wireguard nodes is routed as IP forwarding is on inside the wg0 interface. It would be very straightforward to add the home server to the overlay and thus establish remote access through the tunnels.

It is definitely advisable, at that point at the latest, to think about adding a layer of security to the system. An easy way would be to split the Hub VPS configurations to use two or more separate wg

A possibly better option would be to create the second Wireguard tunnel to terminate on the Mikrotik firewall instead of the server. This would allow filtering on both ends of the connection and limit incoming traffic to only a few acceptable connections. Note the encrypted traffic in the "red" Wireguard tunnels remains completely opaque to the router.

1. Which is very convenient as it means you can connect to their service from systems without a supported client. This does not, however, answer the question of whether their services are professionally run and ethically acceptable, and whether or not they keep log data.

2. This offers a nice level of control, but its main reason is to work around the fact that the Android client does not support split tunneling in a similar fashion to what is shown for the Linux client, above.

3. IPv6 purists will be in pain over this. But it is a way to get around the problem that using provider-specific IPv6 address space inside your home network means you will be re-addressing everything in case you want to change your VPS provider.

4. The route to 101.33.0.0/16 blackholes Tencent's network. A pretty drastic measure to reduce incoming probes to your server from a subnet you know you don't ever want to have anything to do with.

5. ...as long as every application uses the systemwide-configured DNS server. Some apps and browsers can use DNS-over-TLS or DNS-over-HTTPS. You will notice this when ads get displayed again. See the referenced post for discussion of this unfortunate development.